Explore the differences between YOLO-World and YOLOv8 in object detection, highlighting speed, accuracy, and adaptability in real-time applications.

Explore YOLO-World's breakthrough in object detection with real-time, open-vocabulary capabilities for enhanced visual recognition.

Explore Apple's MLX, an array framework for machine learning on Apple Silicon with a Python API, built for efficient ML research and development.

Learn the tricks of prompting Claude 3 Opus with expert tips and techniques. Improve your Claude 3 interactions today!

Claude is a next generation AI assistant built for work and trained to be safe, accurate, and secure.

A guide to rate limiting and retries with Python asyncio, and to implementing a rate limiter for an async Python client.
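As a rough illustration of how these pieces fit together, here is a minimal sketch assuming a sliding-window limit and treating `ConnectionError` as the only retryable failure. `AsyncRateLimiter`, `call_with_retries`, and `flaky_request` are hypothetical names for illustration, not part of any library; a real client would plug in its own request coroutine and its own list of transient errors.

```python
import asyncio
import random
import time
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


class AsyncRateLimiter:
    """Hypothetical helper: allow at most `max_calls` acquisitions per `period` seconds."""

    def __init__(self, max_calls: int, period: float) -> None:
        self.max_calls = max_calls
        self.period = period
        self._timestamps: list[float] = []
        self._lock = asyncio.Lock()

    async def acquire(self) -> None:
        # The lock makes concurrent callers wait their turn.
        async with self._lock:
            while True:
                now = time.monotonic()
                # Forget calls that have slid out of the time window.
                self._timestamps = [t for t in self._timestamps if now - t < self.period]
                if len(self._timestamps) < self.max_calls:
                    self._timestamps.append(now)
                    return
                # Wait until the oldest call in the window expires.
                await asyncio.sleep(self.period - (now - self._timestamps[0]))


async def call_with_retries(
    limiter: AsyncRateLimiter,
    make_request: Callable[[], Awaitable[T]],
    max_attempts: int = 4,
    base_delay: float = 0.1,
) -> T:
    """Hypothetical helper: rate-limit every attempt and retry with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        await limiter.acquire()  # each retry also counts against the limit
        try:
            return await make_request()
        except ConnectionError:  # assumption: only this error is treated as transient
            if attempt == max_attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            # Exponential backoff plus random jitter to avoid synchronized retries.
            await asyncio.sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")


async def main() -> None:
    limiter = AsyncRateLimiter(max_calls=5, period=1.0)
    attempts = 0

    async def flaky_request() -> str:
        # Simulated request that fails twice before succeeding.
        nonlocal attempts
        attempts += 1
        if attempts < 3:
            raise ConnectionError("transient failure")
        return "ok"

    result = await call_with_retries(limiter, flaky_request, base_delay=0.01)
    print(result, attempts)


if __name__ == "__main__":
    asyncio.run(main())
```

Acquiring the limiter inside the retry loop is a deliberate choice: retries hit the same upstream quota as first attempts, so they should be throttled too.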

Discover how to leverage the Google Ads API for advanced keyword planning and optimization. Maximize your campaign's potential today.

Learn how to effortlessly redirect users with Next-Auth middleware for a smoother, more secure user experience. Perfect for dashboards and private areas.

Learn how to install PyTorch in Jupyter Notebook. Follow our step-by-step guide for a smooth setup with conda or pip, avoiding common errors.

Learn how ONNX and PyTorch compare in speed. This article provides a detailed performance analysis to see which framework is more efficient.

Discover how ONNX streamlines AI development by enabling model interoperability across various frameworks and platforms.

Experience coding with Open Interpreter, the leading open-source tool for seamless AI code execution and natural language processing on your device.

Uncover the core technical challenges in Large Language Models (LLMs), from data privacy to ethical concerns, and how to tackle them effectively.

Learn to use YOLOv8 for segmentation with our in-depth guide, which covers how to train, implement, and optimize YOLOv8 with practical examples.

Unlock the full potential of YOLOv8 with our guide on efficient batch inference for faster object detection. Perfect for AI developers.

Explore advanced text-chunking techniques for LLM applications, and learn how chunking improves content relevance, efficiency, and accuracy in language model applications.
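A common baseline for chunking is a fixed-size window with overlap, so that context cut at one chunk boundary also appears at the start of the next chunk. The sketch below assumes whitespace-separated words as the unit; real pipelines often count model tokens instead, and `chunk_text` is a hypothetical helper rather than a library function.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to `chunk_size` words; consecutive chunks
    share `overlap` words so context is not lost at the boundaries."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


print(chunk_text("a b c d e f g h i j", chunk_size=4, overlap=1))
# ['a b c d', 'd e f g', 'g h i j']
```

Larger overlaps preserve more context across boundaries at the cost of more chunks to embed and store.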

Learn how to harness the potential of Phixtral to create efficient mixtures of experts using phi-2 models. Combine 2 to 4 fine-tuned models to achieve superior performance compared to individual experts.

Explore the fundamental differences between LangChain agents and chains, and how they affect decision-making and process structure within the LangChain framework. Gain insight into the adaptability of agents and the predetermined nature of chains.

Learn how to implement and optimize Proximal Policy Optimization (PPO) in PyTorch with this comprehensive tutorial. Dive deep into the algorithm and gain a thorough understanding of its implementation for reinforcement learning.
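At the heart of PPO is the clipped surrogate objective: the mean over timesteps t of min(r_t · A_t, clip(r_t, 1 - ε, 1 + ε) · A_t), where r_t is the ratio of new to old policy probabilities for the action taken and A_t is its advantage estimate. The minimal sketch below uses plain Python lists to stay self-contained; a PyTorch version would compute the same quantity with tensor operations such as `torch.clamp` and `torch.min`. The function name and the ε = 0.2 default are illustrative choices.

```python
def ppo_clip_objective(ratios: list[float], advantages: list[float], epsilon: float = 0.2) -> float:
    """Mean clipped surrogate objective over a batch of timesteps.

    ratios[t]     = pi_new(a_t | s_t) / pi_old(a_t | s_t)
    advantages[t] = advantage estimate A_t
    """
    if len(ratios) != len(advantages) or not ratios:
        raise ValueError("need equally sized, non-empty inputs")
    total = 0.0
    for r, a in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        # Take the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(r * a, clipped_r * a)
    return total / len(ratios)


# Training maximizes the objective, so an optimizer minimizes its negative.
loss = -ppo_clip_objective([1.5, 0.5], [1.0, -1.0])
print(loss)
```

The clipping removes any incentive to push the ratio outside [1 - ε, 1 + ε], which is what keeps each policy update close to the previous policy.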

Learn the best practices for using Roboflow Collect to passively collect images for computer vision projects, maximizing efficiency and accuracy in your dataset creation process.

Discover the potential of multi-task instruction fine-tuning for helping LLMs handle diverse tasks with targeted proficiency. Learn how to refine LLMs such as LLaMA for specific scenarios.

Explore the concept of scaling laws and compute-optimal models for training large language models. Learn how to determine the optimal model size and number of tokens for efficient training within a given compute budget.
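Here is a back-of-the-envelope version of this calculation. It assumes two approximations: training costs roughly C ≈ 6·N·D FLOPs for N parameters and D tokens, and the Chinchilla-style rule of thumb of about 20 training tokens per parameter. Substituting D = 20N gives C ≈ 120·N², so N ≈ √(C / 120). Both constants are rough heuristics rather than exact laws, and `compute_optimal` is a hypothetical helper.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6.0  # approximation: training FLOPs C ~ 6 * N * D
TOKENS_PER_PARAM = 20.0      # rule of thumb: compute-optimal D ~ 20 * N


def compute_optimal(compute_flops: float) -> tuple[float, float]:
    """Return (parameters, training tokens) for a given FLOP budget."""
    # C = 6 * N * (20 * N) = 120 * N^2  =>  N = sqrt(C / 120)
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


n, d = compute_optimal(5.88e23)
print(f"{n / 1e9:.0f}B parameters, {d / 1e12:.1f}T tokens")
```

The example budget lands at roughly the scale of Chinchilla itself (70B parameters trained on 1.4T tokens).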

Discover the use cases and tasks of Large Language Models (LLMs) and the wide range of industries and applications that benefit from them.

Learn how to use Jinja templates effectively for prompt engineering to optimize GPT prompt creation. Explore best practices and techniques for transforming prompts and templates.

Learn how to build stunning interactive data dashboards with Streamlit, the fastest growing ML and data science dashboard building platform. Follow our step-by-step guide to create your own multi-page interactive dashboard with Streamlit.

Learn how to build a Streamlit video chat app with real-time, Snapchat-like filters. This article covers the steps to create a seamless video chat experience.

Learn how to implement real-time design patterns in Streamlit for creating interactive and dynamic data visualizations. Explore techniques such as animating line charts and integrating WebRTC for real-time video processing.

Learn how to create a basic user interface using Streamlit with this comprehensive tutorial. Perfect for beginners, this guide will walk you through the process of building a simple UI for your data apps.

Learn effective asyncio design patterns for building efficient and robust applications.

The asyncio library is part of Python's standard library, introduced in Python 3.4, and is designed to handle asynchronous I/O, event loops, and coroutines. Together with the async/await syntax added in Python 3.5, it provides a framework for writing concurrent code.
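A minimal illustration of the async/await model follows. Three coroutines that each wait 0.2 seconds run concurrently under `asyncio.gather`, so the whole batch finishes in about 0.2 seconds rather than 0.6. `asyncio.sleep` stands in for real I/O such as a network call, and `fetch` is a hypothetical placeholder.

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    # Stand-in for an I/O-bound call; `await` hands control back to the event loop.
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> list[str]:
    start = time.perf_counter()
    # gather schedules all three coroutines concurrently and returns results in order.
    results = await asyncio.gather(fetch("a", 0.2), fetch("b", 0.2), fetch("c", 0.2))
    print(results, f"{time.perf_counter() - start:.2f}s")
    return results


if __name__ == "__main__":
    asyncio.run(main())
```

The concurrency comes from waiting, not from parallel CPU work: while one coroutine is suspended on I/O, the event loop runs the others on the same thread.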

YOLOv8 pose estimation uses deep learning to identify and locate keypoints on a subject's body, such as joints or facial landmarks. Learn how you can use YOLOv8 for pose estimation.

Explore the groundbreaking capabilities of Microsoft Phi-2, a compact language model with innovative scaling and training data curation. Learn more about Phi-2.

Learn how to fine-tune GPT-3.5 Turbo for your specific use cases with OpenAI's platform. Dive into developer resources, tutorials, and dynamic examples to optimize your experience.

FastAPI is an innovative, high-performance web framework for building REST APIs with Python 3.6+ based on standard Python type hints.

Transformer models are a type of neural network architecture that learns context, and thus meaning, by tracking relationships in sequential data, such as the words in a sentence. Transformers underpin many of the latest AI models.
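The mechanism that lets transformers track these relationships is attention. The sketch below is single-head scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V, in plain Python. It omits the learned projections, multiple heads, and masking of a full transformer layer, and the function names are illustrative.

```python
import math

Matrix = list[list[float]]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


# A query aligned with the first key attends almost entirely to the first value.
print(attention([[10.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

Because every query is compared with every key, each position can draw on any other position in the sequence, which is how the model relates words regardless of their distance.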

The OpenAI Assistants API is a robust interface designed to facilitate the creation and management of AI-powered assistants.

Prompt engineering is a critical discipline within the field of artificial intelligence (AI), particularly in the domain of Natural Language Processing (NLP). It involves strategically crafting text prompts that effectively guide LLMs toward the desired outputs.

Reinforcement Learning from Human Feedback (RLHF) integrates human judgment into the reinforcement learning loop, enabling the creation of models that align more closely with complex human values and preferences.

Prompt-tuning is an efficient, low-cost way of adapting an AI foundation model to new downstream tasks without retraining the model and updating its weights.

Learn about Mixtral 8x7B from Mistral AI: its mixture-of-experts architecture, its 32k-token context window, and what sets it apart from other language models.
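In Mixtral, each layer routes every token to 2 of 8 expert feed-forward networks and combines their outputs using weights from a softmax over the selected router scores. The toy sketch below shows that top-k routing step with stand-in experts. In the real model, the router logits come from a learned linear layer and the experts are full feed-forward blocks, so treat `top_k_moe` and the toy experts as hypothetical.

```python
import math
from typing import Callable, Sequence

Vector = list[float]
Expert = Callable[[Vector], Vector]


def top_k_moe(x: Vector, router_logits: Sequence[float], experts: Sequence[Expert], k: int = 2) -> Vector:
    """Sparse mixture-of-experts step: run only the k highest-scoring experts."""
    if len(router_logits) != len(experts) or not 1 <= k <= len(experts):
        raise ValueError("need one logit per expert and 1 <= k <= number of experts")
    # Indices of the k experts with the largest router logits.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only (max subtracted for stability).
    m = max(router_logits[i] for i in top)
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    out = [0.0] * len(x)
    for i in top:
        weight = exps[i] / total
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Eight toy "experts": expert i simply scales its input by i.
experts: list[Expert] = [lambda v, s=i: [s * t for t in v] for i in range(8)]
logits = [0.0, 0.0, 0.0, 5.0, 0.0, 5.0, 0.0, 0.0]
print(top_k_moe([1.0, 2.0], logits, experts))  # [4.0, 8.0]
```

Only the selected experts do any work for a given token, so the compute per token scales with k rather than with the total number of experts.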

Unlock the power of fine-tuning cross-encoders for re-ranking: a guide to enhancing retrieval accuracy in various AI applications.

Learn about generating synthetic datasets for Retrieval-Augmented Generation (RAG) models, enhancing training for improved text generation and context awareness.

Discover ReAct LLM Prompting: a novel technique integrating reasoning and action for dynamic problem-solving with Large Language Models.

Explore Tree of Thoughts Prompting: a cutting-edge framework that enhances Large Language Models like GPT-4 for complex, multi-step problem-solving.

Dive into the nuances of chain-of-thought prompting, comparing techniques and applications in large language models for enhanced AI understanding.

Learn how to effectively utilize GPT's function calling capabilities to integrate chatbots with external systems and APIs, opening up new possibilities for AI-powered applications.

Discover key strategies for maximizing LLM performance, including advanced techniques and continuous development insights.

Discover Retrieval-Augmented Generation (RAG): a technique that improves the accuracy and relevance of LLM outputs by integrating external knowledge.

Learn how to deploy and host full-stack applications on AWS using modern serverless technologies. This comprehensive guide covers everything from frontend development to backend deployment, all on the AWS platform.

Learn how to migrate a Next.js app from Vercel to AWS without any downtime. Discover the best practices and tools for a smooth and efficient transition.

Explore the differences between Next.js and React, including performance, SEO, and developer experience, to determine the best framework for your next project.

Dive deep into the world of React Server Components and learn how to leverage their power to improve performance and simplify complex paradigms in your applications.

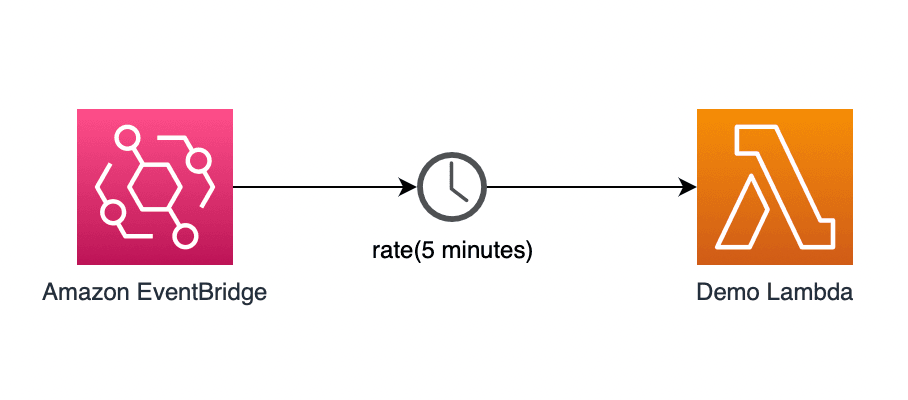

AWS Lambda can run cron jobs on a schedule or at a fixed rate. Learn to schedule cron jobs precisely using cron expressions, at frequencies as high as once per minute.

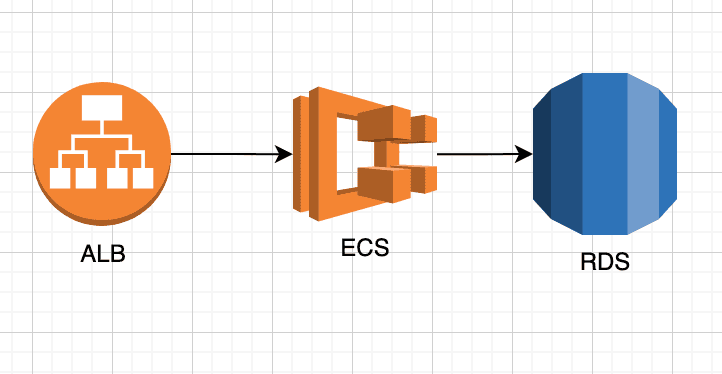

Explore the best alternatives to Vercel for hosting your Next.js applications. This guide dives into the pros and cons of using Netlify, AWS Amplify, and AWS ECS, helping you make an informed choice for your project's hosting solution.

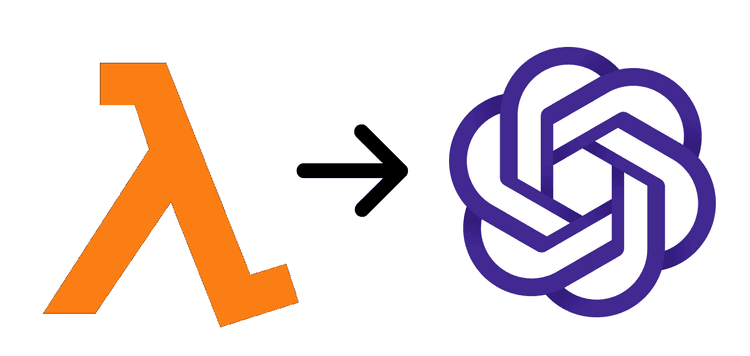

Learn how to build a GPT-4-enabled microservice using AWS Lambda, OpenAI GPT-4, and Serverless Express.

Learn about AWS Amplify and Next.js features. Explore project previews, monitoring, and CodeBuild CI/CD.

Explore the power of Next-Auth and Amazon DynamoDB to create robust Next.js applications. This comprehensive guide explains how to integrate DynamoDB for efficient user data storage.

Explore the nuances of creating static websites without a Node server. Dive into Next.js's static export feature, weigh the pros of adopting Astro, and understand the role of Vite in modern web development.

Strapi is an open-source headless CMS that you can host yourself. Learn the steps to self-host Strapi on AWS.

Explore how to efficiently set up cron jobs using AWS Lambda in TypeScript. Learn why Next.js isn't ideal for cron tasks and how AWS Lambda provides a robust, scalable solution.

Explore an in-depth comparison of AWS Amplify and Vercel for hosting Next.js applications. Discover insights on scalability, cost, developer experience, and more.

If you're deep into your machine learning journey, you've probably heard of tools like MLflow and TensorBoard. These tools offer a more organized way to track, manage, and collaborate on your machine learning experiments.

How to Host MLflow on AWS: A Complete Guide to Architecture and AWS Components

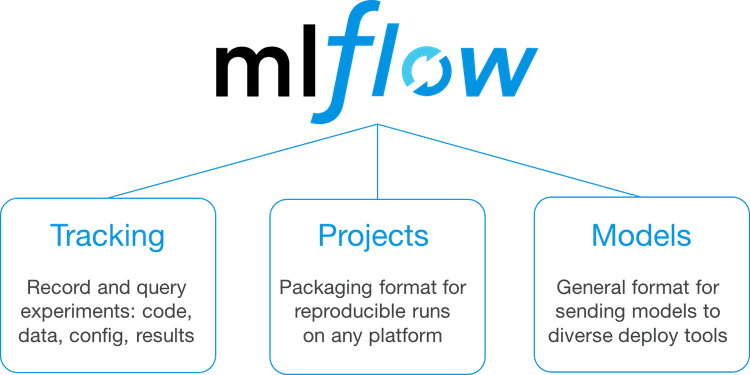

MLflow is an open-source platform designed to manage the complete machine learning lifecycle. It covers everything from tracking experiments to sharing and deploying models, creating a seamless workflow for data scientists and engineers.